Azure Data Factory

Integrating Data in Microsoft Azure

This tutorial answers the following question:

How to Create Data Integration Pipelines?

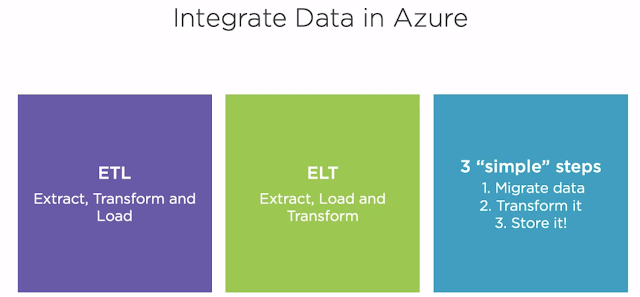

So, first of all, what is data integration?

Answer: The process of combining data from different sources into meaningful and valuable information.
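
To make the definition concrete, here is a minimal Python sketch that combines two sources into one result. The customer and order data are invented for illustration; nothing here is specific to Azure.

```python
import csv
import io
import json

# Hypothetical source 1: customer records exported as CSV.
customers_csv = "id,name\n1,Asha\n2,Ben\n"
# Hypothetical source 2: orders delivered as JSON.
orders_json = (
    '[{"customer_id": 1, "amount": 40},'
    ' {"customer_id": 1, "amount": 25},'
    ' {"customer_id": 2, "amount": 10}]'
)

# Read each source in its own format.
customers = {
    int(row["id"]): row["name"]
    for row in csv.DictReader(io.StringIO(customers_csv))
}
orders = json.loads(orders_json)

# Combine them: total order amount per customer name.
total_by_customer = {}
for order in orders:
    name = customers[order["customer_id"]]
    total_by_customer[name] = total_by_customer.get(name, 0) + order["amount"]

print(total_by_customer)
```

Neither source answers "how much did each customer spend?" on its own; the combined result does.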

Azure Data Factory:

Azure Data Factory is a hybrid data integration service that simplifies ETL at scale. It enables modern data integration in the cloud by providing visual tools and more than 80 connectors that let you move, transform, and save data between diverse data sources. We discuss Azure Data Factory in more depth in the upcoming modules. Data engineers and developers often need to move data from on-premises data sources to Azure. Azure Data Factory excels at this, but it is not the only tool capable of doing so.

Data Migration Assistant:

Data Migration Assistant is another tool that can help with on-premises data migration. We use Data Migration Assistant to migrate schema and data from an on-premises SQL Server to an Azure SQL database. It is also good at detecting compatibility issues between the two data sources.

Azure Database Migration Service:

For large migrations, though, in terms of both the number and the size of databases, Microsoft's recommendation is to use Azure Database Migration Service, a fully managed service designed to migrate databases at scale with minimal downtime.

Azure Data Factory Copy Data Tool:

The Copy Data tool lets data engineers and developers quickly create data pipelines that move data between different services. We use this tool to move data between Azure Blob Storage containers. Most of the time we pull data from data stores, but there are times when the data is pushed to us, streamed by devices or processes. In this course, we'll learn how to process real-time data by using Azure Event Hubs to ingest it as it arrives.

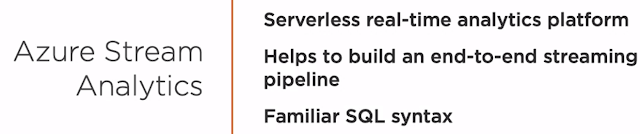

Azure Stream Analytics:

However, moving and storing data is just part of the equation.

We also need to transform, reshape, and analyze this data, and the Azure ecosystem has services for that. Azure Stream Analytics is a serverless real-time analytics platform that enables us to build end-to-end streaming pipelines. We'll work with this service to develop a real-time pipeline that ingests real-time events, transforms the data, stores it, and delivers the processed data to a Power BI report for analysis.

Azure Databricks:

We also have Azure Databricks in the processing stage. It is a fast, easy, and collaborative Apache Spark-based analytics service. We use Azure Databricks to transform data between different formats, enrich it, and save it to an Azure SQL database.

Azure Data Factory and its Components:

Azure Data Factory Pipelines:

A data factory can contain one or more pipelines.

So what is a pipeline?

A pipeline is a logical grouping of activities that lets you manage those activities as a set. A pipeline can contain multiple activities.

So what is an activity?

An activity represents a processing step in a pipeline, i.e. an action to perform on data.

For example, the following can be activities:

1. Ingest Data

2. Transform Data

3. Store Data

These activities can be linked together so that they execute sequentially or in parallel.
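
The sketch below shows, in Python, how linking decides what runs in sequence and what can run in parallel. The pipeline is written as a dictionary shaped like a Data Factory pipeline definition, where each activity lists the activities it depends on in `dependsOn`. The activity names are hypothetical, and the entries are trimmed to the fields needed here (a real definition carries more settings per activity). The `execution_stages` helper is not part of Data Factory; it only illustrates the ordering.

```python
# Hypothetical, trimmed pipeline definition: names are illustrative only.
pipeline = {
    "name": "DemoPipeline",
    "properties": {
        "activities": [
            {"name": "IngestSales", "type": "Copy", "dependsOn": []},
            {"name": "IngestCustomers", "type": "Copy", "dependsOn": []},
            {
                "name": "TransformData",
                "type": "DatabricksNotebook",
                "dependsOn": [
                    {"activity": "IngestSales", "dependencyConditions": ["Succeeded"]},
                    {"activity": "IngestCustomers", "dependencyConditions": ["Succeeded"]},
                ],
            },
            {
                "name": "StoreData",
                "type": "Copy",
                "dependsOn": [
                    {"activity": "TransformData", "dependencyConditions": ["Succeeded"]}
                ],
            },
        ]
    },
}


def execution_stages(pipeline_def):
    """Group activity names into stages. Activities in the same stage have
    all their dependencies met, so they could run in parallel."""
    pending = {
        a["name"]: {d["activity"] for d in a["dependsOn"]}
        for a in pipeline_def["properties"]["activities"]
    }
    done, stages = set(), []
    while pending:
        ready = sorted(n for n, deps in pending.items() if deps <= done)
        if not ready:
            raise ValueError("circular dependency between activities")
        stages.append(ready)
        done.update(ready)
        for name in ready:
            del pending[name]
    return stages


stages = execution_stages(pipeline)
print(stages)
# [['IngestCustomers', 'IngestSales'], ['TransformData'], ['StoreData']]
```

The two ingest activities have no dependencies, so they land in the same stage; the transform and store steps each wait for the stage before them.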

What are the types of activities?

There are 3 types: data movement, data transformation, and control activities.

Data movement and data transformation activities help us copy and transform data.

Control activities help us manage the flow of control.

Among the data movement activities is the Copy Data activity, which copies data among data stores located on-premises and in the cloud.

Data movement activity connectors: these connect to the data stores and fetch the data.

Similarly, data transformation activities include the following, among others:

1. Hive

2. Pig

3. MapReduce

4. Spark

5. Azure Databricks

Control activities, as the name indicates, let us control the flow of activities. Some of the control activities are:

1. ForEach

2. Web Activity

3. Filter
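
As a rough analogy only, the plain-Python sketch below mimics what a Filter followed by a ForEach does: keep the items that match a condition, then run the same inner step once per remaining item. In Data Factory these are configured in the pipeline definition rather than written as code; the file names and the `copy_step` function are hypothetical stand-ins.

```python
# Hypothetical input: file names a pipeline might receive as a parameter.
file_names = ["sales.csv", "readme.txt", "customers.csv"]

# Filter: keep only the items that satisfy a condition.
csv_files = [name for name in file_names if name.endswith(".csv")]


def copy_step(name):
    """Stand-in for an inner activity such as Copy Data."""
    return f"copied {name}"


# ForEach: run the same inner step once per remaining item.
results = [copy_step(name) for name in csv_files]
print(results)
# ['copied sales.csv', 'copied customers.csv']
```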

For more info, visit https://docs.microsoft.com/en-us/azure/data-factory/concepts-pipelines-activities

Data Factory Datasets:

Using a data movement activity, we copy data from a source by connecting to it. A dataset is what represents that source data: a named reference to the data we want to use in our activities.

The source can be a database, blob storage, and so on. For example:

1. Files in blob storage

2. Folders in blob storage

3. Documents

4. Database tables

Linked Service:

To copy data from a source, we have to connect to it. A linked service holds the connection information needed to do that, much like a connection string.
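
To show how these pieces point at each other, here is a hedged Python sketch: a Copy activity references datasets, and each dataset references a linked service. The dictionaries are shaped like Data Factory JSON definitions as far as I understand them, so treat the property names as assumptions to check against the official reference. Every name, the container and file values, and the placeholder connection string are hypothetical.

```python
# All names and the placeholder connection string are hypothetical.
linked_service = {
    "name": "DemoBlobStorage",
    "properties": {
        "type": "AzureBlobStorage",
        "typeProperties": {"connectionString": "<connection string goes here>"},
    },
}


def blob_dataset(name, container, file_name):
    """Build a dataset that points at one file through the linked service."""
    return {
        "name": name,
        "properties": {
            "type": "DelimitedText",
            "linkedServiceName": {
                "referenceName": linked_service["name"],
                "type": "LinkedServiceReference",
            },
            "typeProperties": {
                "location": {
                    "type": "AzureBlobStorageLocation",
                    "container": container,
                    "fileName": file_name,
                }
            },
        },
    }


source = blob_dataset("SourceCsv", "input", "sales.csv")
sink = blob_dataset("SinkCsv", "output", "sales.csv")

# The Copy activity names its input and output datasets by reference.
copy_activity = {
    "name": "CopySales",
    "type": "Copy",
    "inputs": [{"referenceName": source["name"], "type": "DatasetReference"}],
    "outputs": [{"referenceName": sink["name"], "type": "DatasetReference"}],
    "typeProperties": {
        "source": {"type": "DelimitedTextSource"},
        "sink": {"type": "DelimitedTextSink"},
    },
}

# Follow the chain: activity -> dataset -> linked service.
datasets = {d["name"]: d for d in (source, sink)}
for ref in copy_activity["inputs"] + copy_activity["outputs"]:
    dataset = datasets[ref["referenceName"]]
    service = dataset["properties"]["linkedServiceName"]["referenceName"]
    print(ref["referenceName"], "->", service)
```

The activity never holds connection details itself. It names datasets, and the datasets name the linked service, so one connection can be reused by many datasets.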

